Good news for languages with few resources!

Pre-trained Basque monolingual and multilingual language models have proven to be very useful in NLP tasks for Basque!

Even they have been created with a 500 times smaller corpus than the English one and with a 80 times smaller wikipedia.

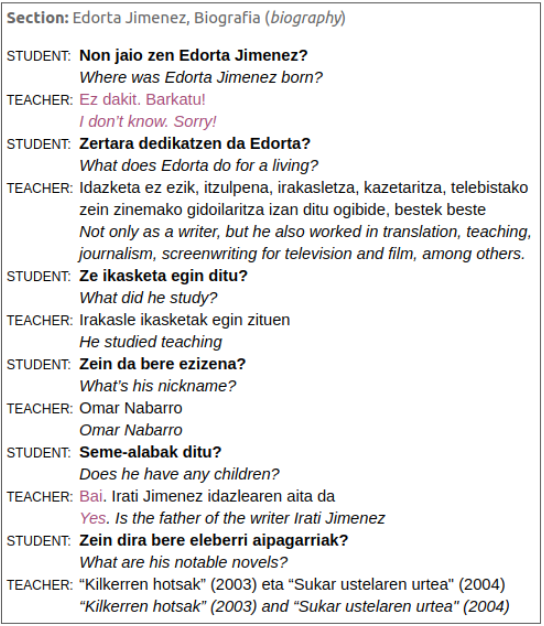

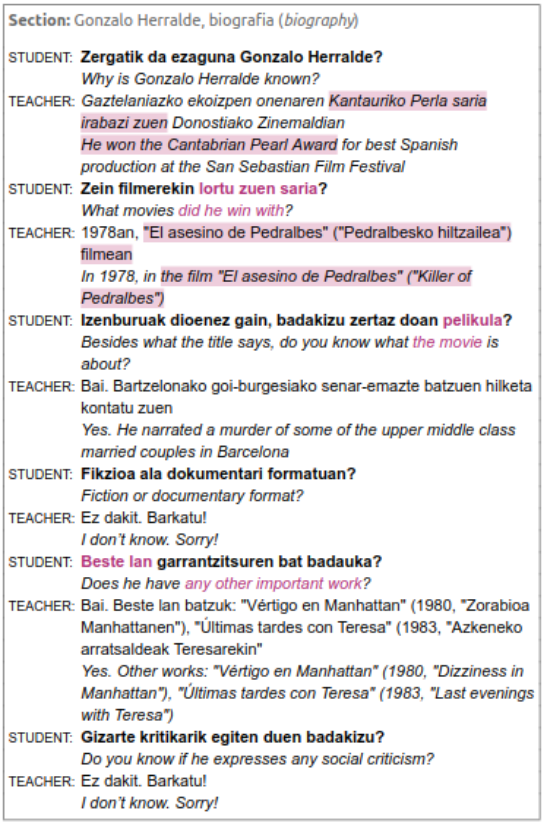

An example of Conversational Question Answering, and its transcription to English.

Word embeddings and pre-trained language models allow to build rich representations of text and have enabled improvements across

most NLP tasks. Unfortunately they are very expensive to train, and many small companies and research groups tend to use models that have been pre-trained and made available by third parties, rather than building their own. This is suboptimal as, for many languages, the models have been trained on smaller (or lower quality) corpora. In addition, monolingual pre-trained models for non-English languages are not always available. At best, models for those languages are included in multilingual versions, where each language shares the quota of substrings and parameters with the rest of the languages. This is particularly true for smaller languages such as Basque.

Last April we show that a number of monolingual models (FastText word embeddings, FLAIR and BERT language models) trained with larger Basque corpora (crawled news articles from online newspapers) produced much better results than publicly available versions in downstream NLP tasks, including topic classification, sentiment classification, PoS tagging and NER. this work was presented in the paper entitled “Give your Text Representation Models some Love: the Case for Basque“. The composition of the Basque Media Corpus (BMC) used in that experiment was as follows:

| Source | Text type | Million tokens |

|---|---|---|

| Basque Wikipedia | Enciclopedia | 35M |

| Berria newspaper | News | 81M |

| EiTB | News | 28M |

| Argia magazine | News | 16M |

| Local news sites | News | 224.6M |

Take into account that the original BERT language model for English was trained using Google books corpus that contains 155 billion words in American English, 34 billion words in British English. The English corpus is almost 500 times bigger than the Basque one.

Agerri

San Vicente

Campos

Otegi

Barrena

Saralegi

Soroa

E. Agirre

An example of a dialogue where there are many references in the questions to previous answers in the dialogue.

Now, in September we have published IXAmBERT, a multilingual language model pretrained for English, Spanish and Basque. And we have successfully experimented with it in a Basque Conversational Question Answering system. This transfer experiments could be already performed with Google’s official mBERT model, but as it covers that many languages, Basque is not very well represented. In order to create this new multilingual model that contains just English, Spanish and Basque, we have followed the same configuration as in the BERTeus model presented in April. We re-use the same corpus of the monolingual Basque model and add the English and Spanish Wikipedia with 2.5G and 650M tokens respectively. The size of these wikipedias is 80 and 20 times bigger than the Basque one.

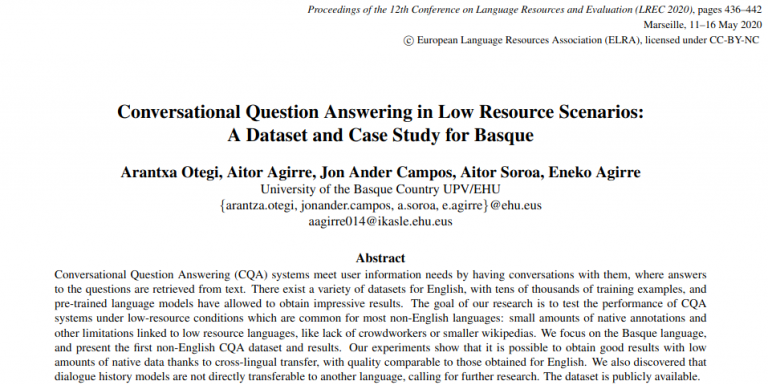

The good news is that this model has been successfully used to transfer knowledge from English to Basque in a conversational Question/Answering system, as reported in the paper Conversational Question Answering in Low Resource Scenarios: A Dataset and Case Study for Basque. In the paper, the new language model called IXAmBERT performed better than mBERT when transferring knowledge from English to Basque, as shown in the following table:

| Model | Zero-shot | Transfer learning |

|---|---|---|

| Baseline | 28.7 | 28.7 |

| mBERT | 31.5 | 37.4 |

| IXAmBERT | 38.9 | 41.2 |

| mBERT + history | 33.3 | 28.7 |

| IXAmBERT + history | 40.7 | 40.0 |

This table shows the results on a Basque Conversational Question Answering (CQA) dataset. Zero-shot means that the model is fine-tuned using using QuaC, an English CQA dataset. In the Transfer Learning setting the model is first fine-tuned on QuaC, and then on a Basque CQA dataset.

These works set a new state-of-the-art in those tasks for Basque.

All benchmarks and models used in this work are publicly available: https://huggingface.co/ixa-ehu/ixambert-base-cased

Leave a Reply